
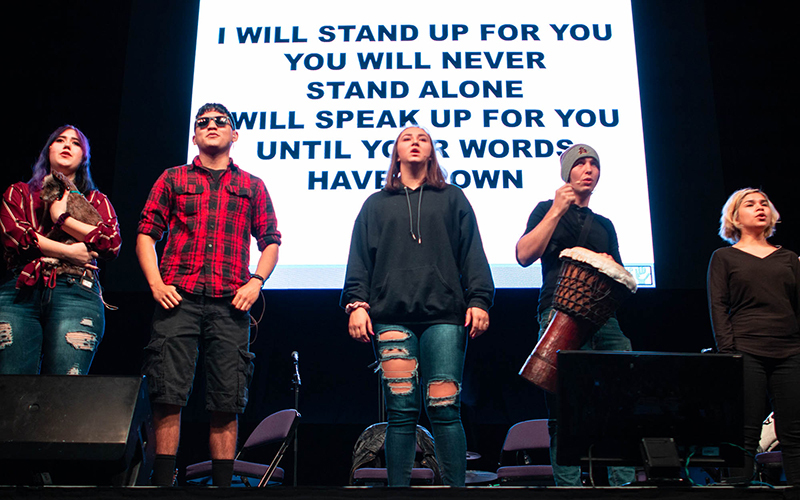

Research shows that more young Americans are experiencing mental health issues, and technology is partly to blame. A new California law requires tech companies to do more to protect children’s privacy and data online. The measure could pave the way for similar laws elsewhere. (Photo by Alexia Faith/Cronkite News)

PHOENIX – The word “crisis” is dominating headlines today about children’s mental health, and experts and advocates are pointing the finger at one factor in particular: social media.

After recent reports of the impact of platforms like Instagram on the well-being of teenagers, several groups have sued tech companies, and in September California passed the first law in the nation requiring companies to do more to protect children’s privacy and data online.

Dr. Jenny Radesky, a developmental-behavioral pediatrician who studies the interaction between technology and child development, has seen firsthand what this youth crisis looks like.

“Media is so well wired into our psychology now that you can imagine that sometimes it plays to our strengths and sometimes it plays to our weaknesses,” said Radesky, an associate professor at the University of Michigan Medical School.

“We see this with a lot of referrals to medical centers for everything from eating disorders to suicide attempts. There is no doubt that there is a mental health crisis that existed before the pandemic – and has only gotten worse now.”

A December advisory from the U.S. surgeon general warned that more young Americans are experiencing mental health issues. The COVID-19 pandemic played a role, but so did technology, as young people are “bombarded with messages through media and popular culture that undermine their sense of self-worth, telling them they’re not good enough, popular enough, smart enough, or rich enough.”

Tech companies tend to prioritize engagement and profits over user health, the report says, using practices that could increase children’s time online and in turn contribute to anxiety, depression, eating disorders and other problems.

During the pandemic, the amount of time young people spent in front of screens for non-school activities increased from an average of 3.8 hours per day to 7.7 hours per day.

Radesky is particularly concerned about social media applications that use algorithms to continuously deliver content to users. She worries about what a child’s browsing habits or online behavior reveal about them to the platform. For example, a user who constantly watches violent videos might signal to the app that they are somewhat impulsive.

She noted that TikTok and Instagram use such algorithms, while Twitch and Discord require users to search for content.

“Automated systems aren’t always going to catch it when they’re serving something that could potentially, for a child, a teenager or even an adult, lead into territory that’s not in their best interest,” Radesky said.

“We need a digital ecosystem that respects the fact that kids need space and time away from technology, and they need to engage with content that is positive and hopeful.”

As the nation searches for remedies, California has passed a law that could serve as a model for other states.

The bipartisan legislation was sponsored by Assemblymembers Buffy Wicks, D-Oakland, and Jordan Cunningham, R-San Luis Obispo. It bars online service companies from using children’s personal information, collecting or storing younger users’ location data, profiling children and encouraging children to provide personal information.

A task force must be established by January 2024 to determine how best to implement these policies.

The measure, billed as the first of its kind in the U.S., was modeled after a similar measure passed last year in the United Kingdom, where the government set 15 standards that tech companies, particularly those that collect data from children, must follow.

Common Sense Media, a San Francisco-based nonprofit that advocates for safe and responsible media use by children, supported the California measure. Irene Lee, the organization’s policy adviser, called it a first step toward pushing tech companies to make the internet safer for children.

Lee said companies have made “deliberate design choices” to increase engagement, such as automatically playing videos as users scroll and using algorithms to deliver targeted content to users, and argued that companies are more than capable of making changes to protect younger users.

“It’s long overdue for businesses to make some of these simple and necessary changes, such as giving young users the most protective privacy settings by default and not automatically tracking their precise location,” Lee said.

Lee said protecting privacy goes hand-in-hand with protecting mental health, given that teenagers are extremely vulnerable to the effects of online content.

“They won’t develop critical thinking skills or the ability to distinguish between advertising and content until they’re older. This leaves them very ill-equipped to evaluate what they see and what impact it might have on them.”

Lee cited an April report by the advertising watchdog Fairplay for Kids that found Instagram’s algorithm was promoting pro-eating-disorder accounts that had amassed 1.6 million unique followers.

“Algorithms profile children and teens to serve them images, memes and videos that encourage restrictive diets and extreme weight loss,” the report said. “In turn, Instagram promotes and recommends kids’ and teens’ eating disorder content to half a million people around the world.”

The report drew scrutiny from members of Congress, who demanded answers from Meta, Instagram’s parent company, and its CEO, Mark Zuckerberg.

The Social Media Victims Law Center later filed a lawsuit against Meta on behalf of Alexis Spence, a California teenager who developed an eating disorder along with anxiety and depression when she was just 11 years old.

The lawsuit alleges that Alexis was targeted by Instagram pages that promote anorexia, negative body image and self-harm, and claims that Instagram’s algorithm is designed to be addictive and specifically targeted at teenagers.

It’s one of several similar lawsuits against tech companies filed after Frances Haugen, a former Meta product manager, released internal documents in 2021 showing that the company knew its algorithms were promoting harmful content.

In a September 2021 statement, Meta said it had taken steps to reduce harm to young people, including introducing new resources for those struggling with body image issues; updating the policy on the removal of graphic content related to suicide; and launching an Instagram feature that allows users to protect themselves from unwanted interactions to reduce bullying.

“We have a long history of using our research … to inform changes in our programs and provide resources for the people who use them,” the company said.

And in a Facebook post last year, Zuckerberg said: “The reality is that young people are using technology. … Technology companies must design experiences that meet their needs while keeping them secure. We are deeply committed to doing industry-leading work.”

Dylan Hoffman is the executive director of TechNet, a network of tech executives representing about 100 companies. While the organization supports protecting children online, it had some concerns about the new California measure, he said.

One provision requires companies to assess the age of child users “with an acceptable level of certainty,” and Hoffman is concerned that those verification steps could affect adults seeking legal content.

“The bill defines children as anyone under the age of 18, which can create some problems,” he said, noting that TechNet pushed for the bill to limit the definition of “children” to users under 16. “What does it mean for companies to determine the age of their users? Do they have to do increasingly strict age and identity verification of their users?”

This, he said, “could have a number of consequences” — not only in terms of access for children, but also for adults.

Radesky hopes that as conversations continue about the pros and cons of social media use, the media will see children’s mental health as an issue that everyone needs to address, not just parents.

“My hope is that in the future, as the press continues to cover this … they will really start to shift more attention to the changes in the technology environment and what technology companies can do better,” she said.

Earlier this year, federal legislation was introduced in Congress that calls on tech companies to introduce new safeguards for children. But with the Kids Online Safety Act still pending, Radesky said the California measure will serve as a test for companies and young people.

“This group of California kids will see: How well does technology do this? It all depends on law enforcement and technology companies really listening to their subsidiary design teams,” she said.

Ultimately, Radesky added, companies also need to start looking at such laws not as regulation, but “rather as cleaning up this neighborhood that’s filled with trash.”
